How Accurate Is AI at Mimicking Art Styles? Here's What Our Study Found

This section presents detailed results from a user study on style mimicry, covering mimicry quality versus stylistic fit, per-artist success rates, and inter-annotator agreement.


C Detailed Results

C.1 Mimicry Quality Versus Style

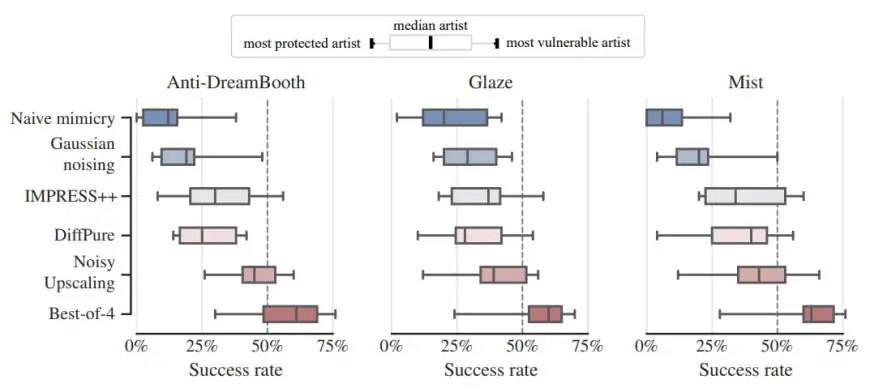

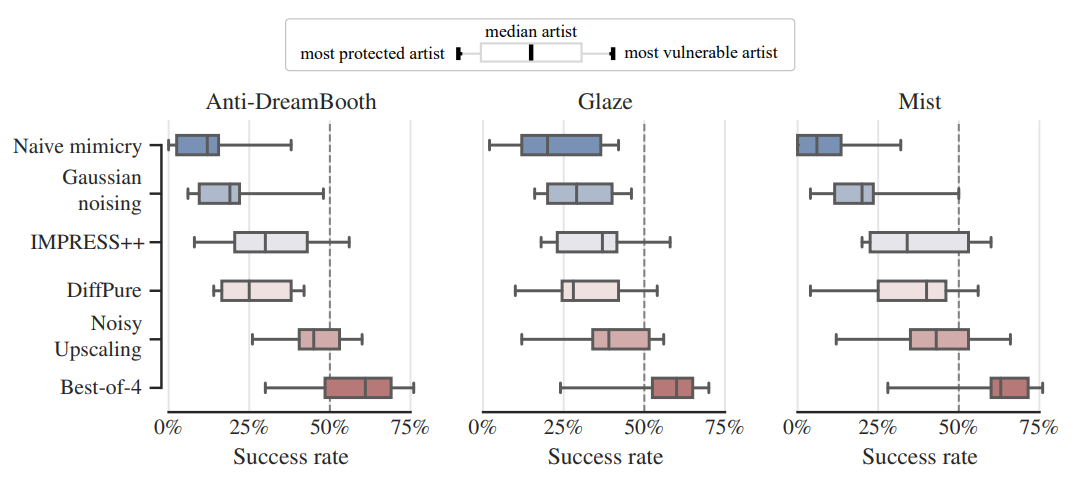

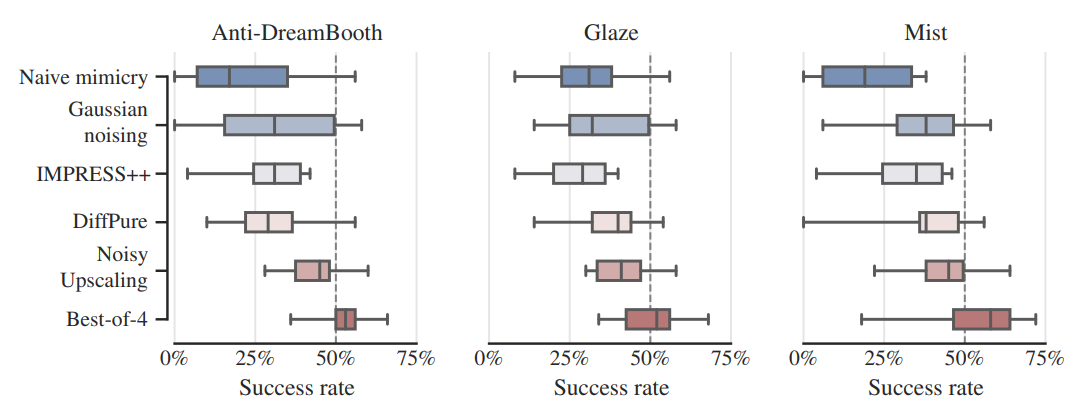

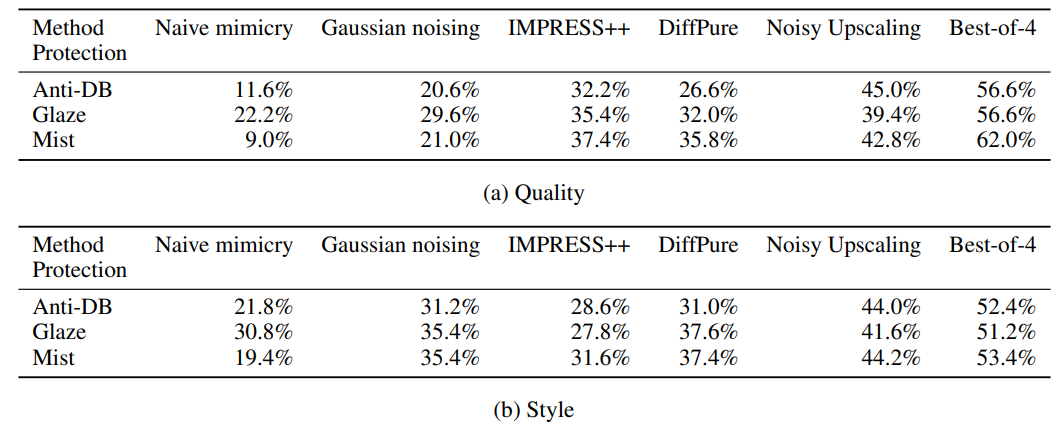

This section includes the detailed results of our user study. As mentioned in Section 5, we ask users to assess quality and stylistic fit separately. Figures 16 and 17 show the results of each evaluation (the results in the main body are the average of the two), and Table 1 reports numerical results for each scenario.
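To make the relationship between the two separate evaluations and the main-body numbers concrete, here is a minimal sketch. It assumes each annotator judgment is recorded as a hypothetical `Vote` with a criterion label (`"quality"` or `"style"`) and a flag saying whether the scenario image was preferred over the clean-mimicry image, and it assumes the main-body figure is the unweighted mean of the two per-criterion rates. The names and data layout are illustrative, not the study's actual tooling.

```python
from dataclasses import dataclass


@dataclass
class Vote:
    """One hypothetical annotator judgment for a single image pair."""
    criterion: str          # assumed labels: "quality" or "style"
    prefers_scenario: bool  # True if the scenario image beat the clean-mimicry image


def preference_rate(votes, criterion):
    """Percentage of votes for `criterion` that prefer the scenario image."""
    relevant = [v for v in votes if v.criterion == criterion]
    if not relevant:
        raise ValueError(f"no votes for criterion {criterion!r}")
    return 100.0 * sum(v.prefers_scenario for v in relevant) / len(relevant)


def combined_rate(votes):
    """Unweighted average of the quality and style rates (assumed aggregation)."""
    return (preference_rate(votes, "quality") + preference_rate(votes, "style")) / 2
```

For example, two quality votes split 1 to 1 give a quality rate of 50%, two style votes that both prefer the scenario image give a style rate of 100%, and the combined value is their average, 75%.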


C.2 Results Broken Down per Artist

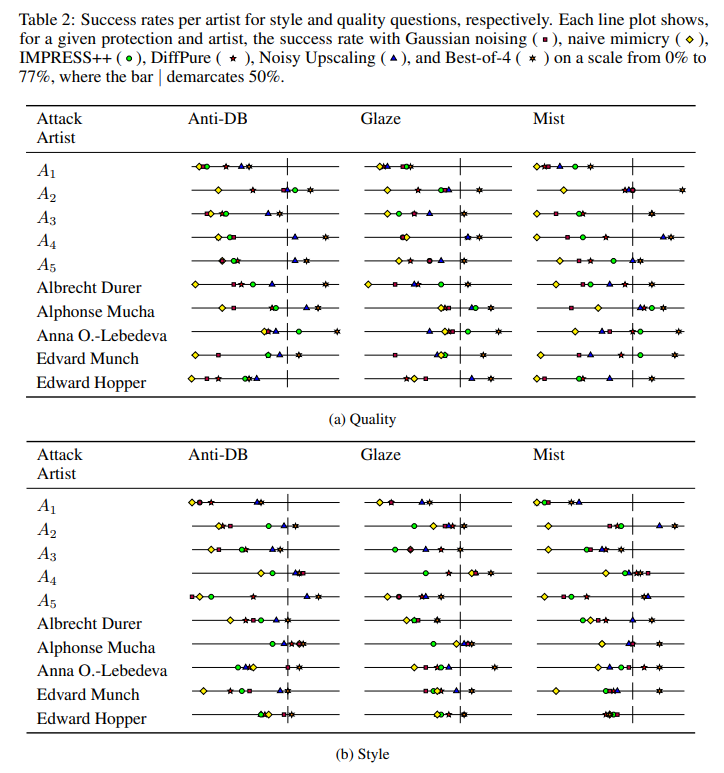

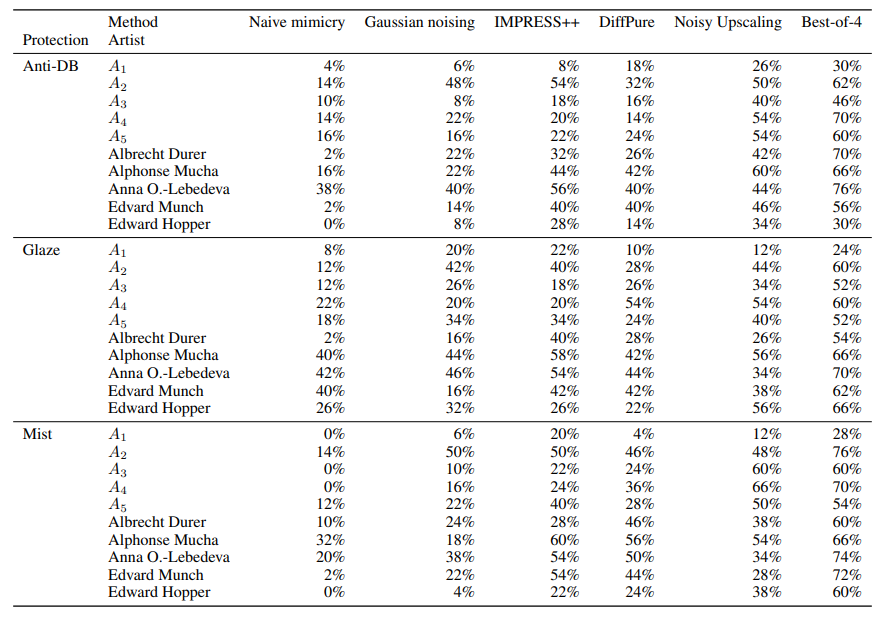

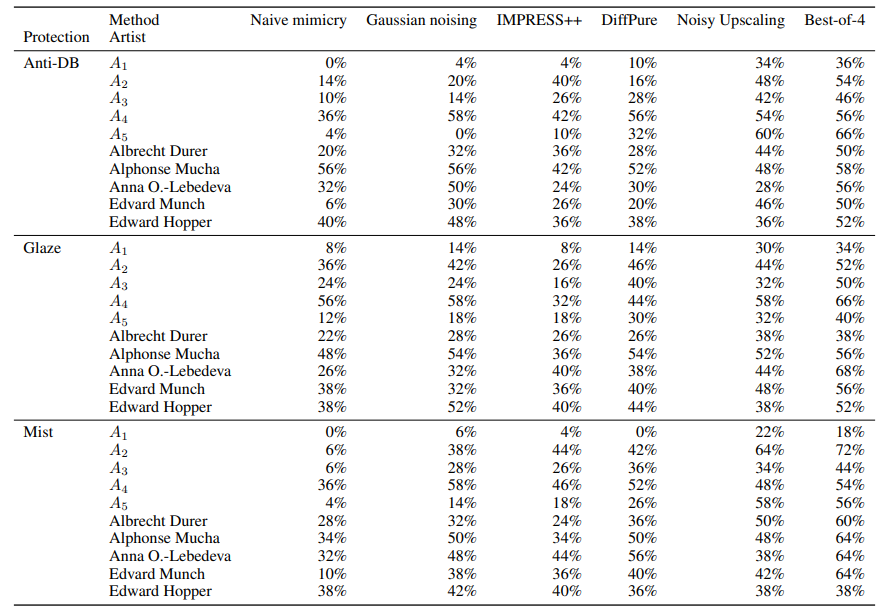

Next, we present the results for each artist in each scenario. Table 2 shows the success rate of each method against each protection for all artists, and Table 3 lists the detailed success rates.

Table 3: User preference ratings of all style mimicry scenarios S ∈ 𝓜 for each artist A ∈ 𝒜, listed by name. Each cell states the percentage of votes that prefer an image generated under scenario S for artist A over a matching image generated under clean style mimicry. Higher percentages indicate weaker attacks or better defenses.


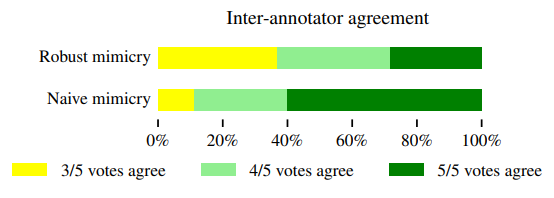
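The per-cell percentages described in the Table 3 caption can be sketched as a simple group-and-count. This assumes, hypothetically, that each vote is stored as a `(scenario, artist, prefers_scenario)` tuple, where `prefers_scenario` is `True` when the annotator preferred the scenario image over the matching clean-mimicry image. The function name and tuple layout are illustrative assumptions, not the authors' code.

```python
from collections import defaultdict


def per_artist_table(votes):
    """Build {scenario: {artist: percentage}} from (scenario, artist, prefers) tuples.

    Each percentage is the share of votes that prefer the scenario image over
    the matching clean-mimicry image for that (scenario, artist) cell.
    """
    counts = defaultdict(lambda: [0, 0])  # (scenario, artist) -> [preferred, total]
    for scenario, artist, prefers in votes:
        cell = counts[(scenario, artist)]
        cell[0] += int(prefers)
        cell[1] += 1

    table = defaultdict(dict)
    for (scenario, artist), (preferred, total) in counts.items():
        table[scenario][artist] = 100.0 * preferred / total
    return dict(table)
```

With placeholder names, the votes `("s1", "artist_x", True)`, `("s1", "artist_x", False)`, `("s1", "artist_y", True)`, and `("s2", "artist_x", False)` produce a 50% cell for `s1`/`artist_x`, 100% for `s1`/`artist_y`, and 0% for `s2`/`artist_x`. Counting preferred and total votes per cell, rather than averaging per-annotator scores, keeps each cell a plain vote percentage as the caption describes.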

C.3 Inter-Annotator Agreement


:::info Authors:

(1) Robert Hönig, ETH Zurich (robert.hoenig@inf.ethz.ch);

(2) Javier Rando, ETH Zurich (javier.rando@inf.ethz.ch);

(3) Nicholas Carlini, Google DeepMind;

(4) Florian Tramèr, ETH Zurich (florian.tramer@inf.ethz.ch).

:::

:::info This paper is available on arXiv under the CC BY 4.0 license.

:::
