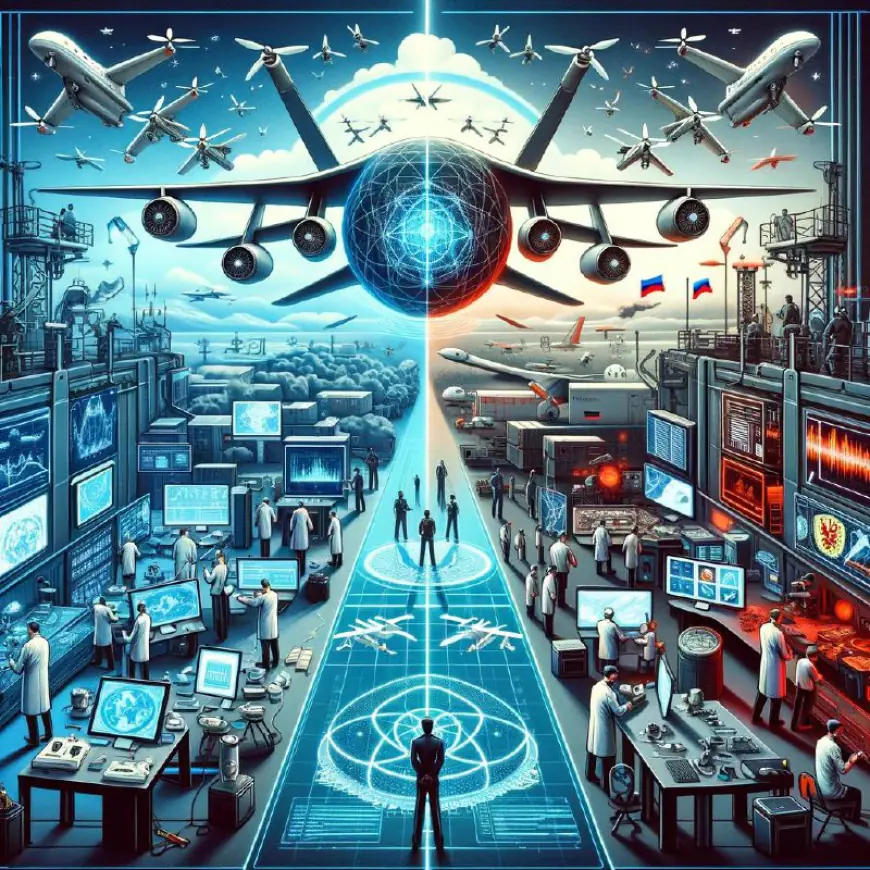

The Race for AI-Enabled Drones: Russia’s Aggressive Approach vs. U.S. Caution

The ongoing conflict in Ukraine has sparked intense debate about the use of artificial intelligence (AI) in military operations. While both Ukrainian and Russian forces have harnessed AI for decision-making and data analysis, there are notable differences in their approaches.

Russian innovation

Russia has embraced AI in its military operations, drawing on a thriving ecosystem of funding, manufacturing, and volunteer-driven efforts. These volunteers use commercial off-the-shelf technologies to develop AI-enabled drones and combat systems, often with support from the Russian government and private entities. One example is the Shturm 1.2 heavy quadcopter drone, which its developers claim uses AI for independent decision-making and targeting.

Plausibility and analysis

Whether Russia’s claims about its AI-enabled drones are plausible remains a subject of debate. What is widely acknowledged, however, is the need for drones that can operate autonomously, since electronic warfare can jam the radio links that remotely piloted drones depend on. Russian volunteer organizations are competing with their Ukrainian counterparts to develop such technology, underscoring how central AI has become to modern warfare.

American caution

In contrast to Russia, the United States takes a more deliberate approach to AI in military operations. Its focus is on overcoming technology acquisition challenges, integrating data, and upholding ethical AI principles. While Russia tests commercial systems in live military operations, the U.S. emphasizes developing a robust AI assurance framework.

Balancing assurance and urgency

The U.S. must balance the urgency of deploying AI-enabled systems against the need for rigorous testing and assurance. Department of Defense Directive 3000.09 imposes strict requirements on autonomous weapon systems, emphasizing safety and reliability. In a fast-moving conflict, however, this cautious approach may prove too slow.

The conflict in Ukraine has pushed both sides to embrace AI technology for military drones. Russia’s aggressive approach, which relies on commercial technologies and volunteer efforts, stands in contrast to the U.S.’s cautious strategy. Russia’s rapid integration of unvetted technologies into military operations raises the question of whether the U.S. is ready to keep pace. To maintain technological superiority while upholding democratic values, the U.S. must direct leadership, resources, and infrastructure toward AI assurance.

The race for AI-enabled military drones is intensifying, with Russia’s aggressive approach contrasting with the cautious stance of the United States. The conflict in Ukraine serves as a crucible for innovation in this field, but questions about safety, reliability, and ethics remain. As the battle for technological superiority continues, the U.S. must navigate the fine line between urgency and assurance to maintain its competitive edge.

What's Your Reaction?