OpenAI's Sora first look: YouTuber Marques Brownlee breaks down the problems with the AI video model

Marques Brownlee got to use OpenAI's Sora before anyone else. Here are his thoughts on the AI video generator.

One of the most highly anticipated AI products has just arrived: OpenAI's AI video generator Sora launched on Monday as part of the company's 12 Days of OpenAI event.

OpenAI has provided sneak peeks at Sora's output in the past. But how different is it at launch? OpenAI has certainly been hard at work updating and improving its AI video generator in preparation for the public launch.

YouTuber Marques Brownlee had a first look at Sora, releasing his video review of the latest OpenAI product hours before OpenAI even officially announced the launch. What did Brownlee think?

What Sora is good at

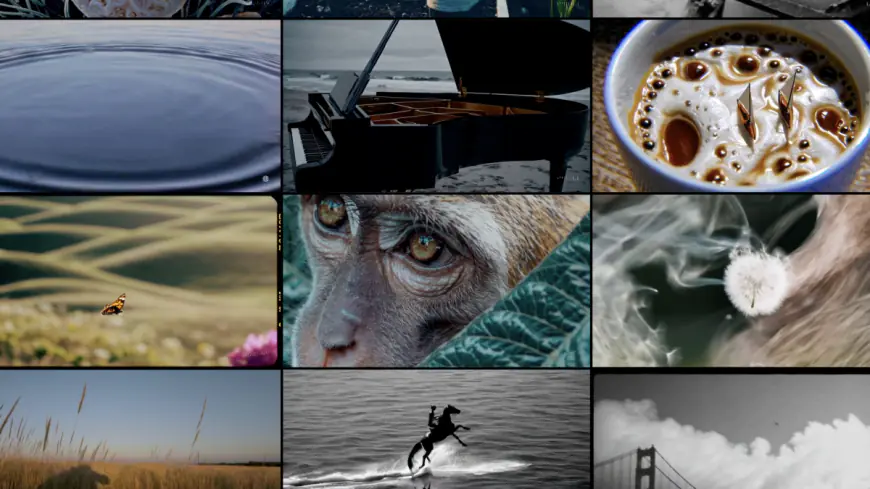

According to Brownlee, his testing found that Sora excels at creating landscapes. AI-generated overhead, drone-like shots of nature or famous landscapes look just like real-life stock footage. Of course, as Brownlee points out, if you are well-versed in how the surroundings of a landmark look, you might be able to spot the differences. However, there's not too much that looks distinctly AI-generated in these types of Sora-created clips.

Perhaps the type of video Sora is best able to create, according to Brownlee, is abstract video. Background or screensaver-type abstract art can be made quite well by Sora, even with specific instructions.

Brownlee also found that certain types of Sora-generated animated content, like stop-motion or claymation-style animation, look passable at times, because the sometimes jerky movements that still plague AI video come across as stylistic choices.

Most surprisingly, Brownlee found that Sora was able to handle very specific animated text visuals. Words often show up as garbled text in other AI image and video generation models. With Sora, Brownlee found that as long as the text was specific, say a few words on a title card, Sora was able to generate the visual with correct spelling.

Where Sora goes wrong

Sora, however, still presents many of the same problems that all AI video generators that came before it have struggled with.

The first thing Brownlee mentions is object permanence. Sora has issues with displaying, say, a specific object in an individual's hand throughout the runtime of the video. Sometimes the object will move or just suddenly disappear. Just like with AI text, Sora's AI video suffers from hallucinations.

Which brings Brownlee to Sora's biggest problem: physics in general. Photorealistic video seems to be quite challenging for Sora because it just can't seem to get movement right. A person simply walking will start slowing down or speeding up in unnatural ways. Body parts or objects will suddenly warp into something completely different at times as well.

And while Brownlee did mention those improvements with text, Sora still garbles the spelling of background text, like the kind you might see on buildings or street signs, unless the prompt is very specific about it.

Sora is very much an ongoing work, as OpenAI shared during the launch. While it may offer a step up from other AI video generators, it's clear that there are some areas that all AI video models are going to find challenging.
